Main variable of interest

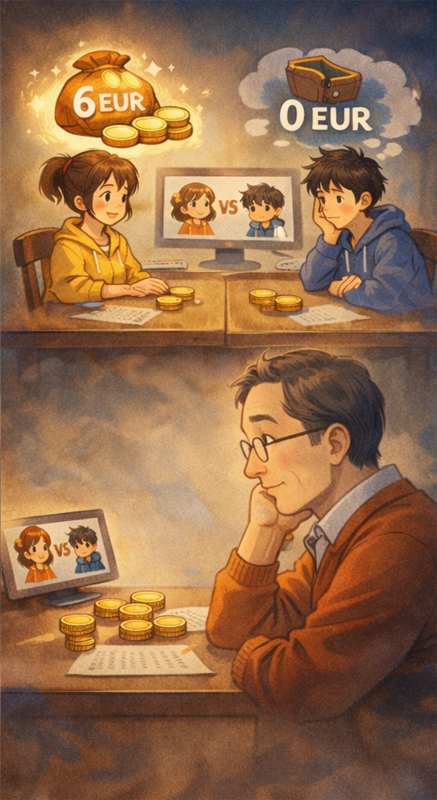

The inequality implemented by spectator \(j\) is given by:

\[ e_j = \frac{\left| \text{Income worker } A_j - \text{Income worker } B_j \right|}{\text{Total income}} \in \left[ 0, 1 \right] \]

where Worker \(A_j\) is the worker with the higher pre-redistribution earnings. A higher value of \(e_j\) denotes lower inequality aversion:

- \(e_j = 1\) means no redistribution.

- \(e_j = 0\) means 50-50 redistribution.
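
The measure above can be sketched in a few lines of Python. This is only an illustration: the function name `implemented_inequality`, the argument names, and the income amounts are hypothetical, and the sketch assumes that total income equals the sum of the two workers' incomes after the spectator's decision.

```python
def implemented_inequality(income_a: float, income_b: float) -> float:
    """Return e_j = |income_a - income_b| / total income for one spectator.

    income_a, income_b: the two workers' incomes after the spectator's choice.
    Assumption (not stated in the text): total income = income_a + income_b.
    """
    if income_a < 0 or income_b < 0:
        raise ValueError("incomes must be non-negative")
    total = income_a + income_b
    if total <= 0:
        raise ValueError("total income must be positive")
    return abs(income_a - income_b) / total


if __name__ == "__main__":
    # Hypothetical amounts: worker A keeps everything (no redistribution).
    print(implemented_inequality(6.0, 0.0))  # 1.0
    # Hypothetical amounts: the spectator splits income equally.
    print(implemented_inequality(3.0, 3.0))  # 0.0
    # An intermediate choice gives a value strictly between 0 and 1.
    print(implemented_inequality(4.0, 2.0))
```

Because of the absolute value, the result does not depend on which worker is passed first, and it always lies in \([0, 1]\) for non-negative incomes.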